Stay Informed

Join our newsletter to receive updates about our mission, impact stories, and ways you can help make a difference.

The clock is ticking. Every day we wait to address AI-generated child sexual abuse material is another day predators get ahead of law enforcement.

OpenAI recently announced that ChatGPT will permit erotic content for age-verified adults. The company has framed this decision as a way of respecting adult autonomy. But this decision opens a floodgate that could accelerate one of the most urgent threats facing children today: the explosion of AI-generated child sexual abuse material (AI-CSAM).

The numbers are already terrifying. And they're getting worse by the day.

The Crisis Is Already Here!

This isn't a hypothetical future threat. According to the Internet Watch Foundation (IWF) July 2024 report, over 3,500 new AI-generated child sexual abuse images appeared on a single dark web forum between 2023 and 2024. Most concerning of all, the images are becoming more severe, with a growing share depicting the most extreme Category A abuse.

Reports of AI-generated CSAM surged 380% in 2024, from 51 confirmed reports in 2023 to 245. Each report can contain thousands of individual images. The National Center for Missing & Exploited Children (NCMEC) received 67,000 reports of AI-generated CSAM in all of 2024, then received 485,000 reports in just the first half of 2025. That is more than seven times the previous full-year total in only six months, a 624% increase.

This is a major concern because law enforcement can no longer tell what's real. In 193 of the 245 reports investigated, AI-generated images were so realistic that they had to be treated as if they depicted actual children being raped. At the same time, where the law requires proof that a real child is depicted, prosecuting the creators of purely synthetic images is difficult, because no identifiable real person appears in them.

We are losing this fight. And loosening restrictions on sexual AI content will make it exponentially worse.

The Human Cost of Delay

These aren't just statistics. Real predators are already weaponizing AI to abuse children:

Hugh Nelson (UK, 2024)

A British man was sentenced to 18 years in prison for using AI face-transformation software to convert ordinary photographs of real children into graphic sexual abuse imagery. He then sold albums of these images in online chat rooms on the open web. Nelson targeted real children, turning innocent photos into abuse imagery that will haunt those victims for the rest of their lives.

Steven Anderegg (Wisconsin, 2025)

Billed as the first federal prosecution for creating CSAM entirely through AI, the case charges Anderegg with producing images that never depicted a real child. Prosecutors argue (correctly) that such material still harms children by normalizing abuse and fueling demand for exploitation.

Europol Operation (February 2025)

Twenty-five people were arrested in a coordinated international operation after a Danish man allegedly ran a paid platform distributing fully AI-generated child sexual abuse material, and 273 suspects were identified. Users paid to access content showing "children being abused." The platform's administrator allegedly produced the content using AI tools.

California's Eight Lost Cases

Between December 2023 and September 2024, prosecutors in Ventura County, California, had to drop eight cases involving AI-generated CSAM because state law at the time required proving that the imagery depicted a real child. Eight predators walked free because the law couldn't keep up with technology.

Kaylin Hayman (Disney Channel Actress)

A 17-year-old actress from Disney's "Just Roll with It" became a victim of AI-generated "deepfake" sexual imagery. "I felt like a part of me had been taken away," she said, "even though I was not physically violated." Her advocacy helped pass California's updated law, but millions of children remain unprotected.

Why Allowing Adult Erotica Makes the Child Abuse Problem Worse

When AI systems are trained and optimized to produce sexual content for adults, five devastating side effects follow:

1. The Supply Explodes

Models designed to generate adult sexual content can be manipulated through clever prompts, jailbreaks, or outright abuse to produce sexualized images of minors. Even with filters, bad actors find workarounds. The technical capability to create erotica for adults is the same capability that creates AI-CSAM.

2. Synthetic Abuse Is Real Abuse

A dangerous myth persists: "It's not real, so it's not harmful." This is false.

AI-CSAM normalizes the sexual abuse of children

It can be used as a grooming tool to desensitize real children

It re-traumatizes survivors who see that society is creating new ways to depict their abuse

It trains and rehearses predators, lowering their inhibitions

Studies show it can stimulate offending behavior

Every child-safety organization on Earth agrees: synthetic child sexual abuse material is abuse material, full stop.

3. Age Verification Won't Save Us

OpenAI says adult content will be "age-gated," but:

Age verification systems can be spoofed or bypassed

They create new privacy and data security risks

They can't stop verified adults from creating and distributing AI-CSAM

Even Disney Channel actresses get deepfaked; verification does nothing to protect the people whose likenesses are used

4. Detection Systems Are Already Overwhelmed

Traditional child-protection tools rely on "hash matching": comparing new images against databases of known abuse material. AI-generated content bypasses these systems entirely because every image is new and unique.

Law enforcement is drowning:

98% of AI-generated CSAM depicts girls, amplifying violence against women and girls

Investigators waste time trying to identify and rescue children who don't exist

The sheer volume threatens to collapse detection and reporting systems

"We're playing catch-up to a technology that is moving far faster than we are," says Ventura County District Attorney Erik Nasarenko.

5. Grooming and Coercion Get an AI Upgrade

Sexual chatbots can be weaponized as grooming tools:

Predators can role-play abusive scenarios and refine their tactics

AI can generate personalized sexual content featuring a specific child's likeness

Chatbots can be used to normalize inappropriate relationships and boundary violations

The line between fantasy and action blurs when AI makes abuse "feel" accessible

What Must Happen Right Now

Every day we delay, predators generate thousands more images. Here's what needs to happen immediately:

1. Legal Clarity: All AI-CSAM Is CSAM

AI-CSAM is child sexual abuse material generated by AI.

Every jurisdiction must unambiguously criminalize AI-generated child sexual abuse material whether it depicts a real child or not, whether it's photorealistic or cartoon-like. The law must catch up to the technology now. As of late 2025, many U.S. states still have legal gaps. Children cannot wait for lawmakers to feel comfortable.

2. Strong Age Verification

Age verification should be robust, privacy-respecting, and independently audited. But it's only a speed bump and not a permanent solution. Verified adults can still commit crimes. We need multiple layers of protection, not a single gate.

3. Mandatory Watermarking and Provenance

Every AI-generated image should carry tamper-resistant metadata or forensic watermarks. This won't stop abuse, but it will make it harder to deny and easier to trace. Investigators need tools to distinguish real photos from AI creations and to track who made what.

4. Next-Generation Detection, Funded Now

Traditional hash-matching is useless against novel AI content. We need machine learning systems that can detect patterns, styles, and content in AI-generated imagery. This technology exists. It needs funding, deployment, and mandatory use by platforms.

5. Mandatory Reporting and Transparency

Platforms must report suspected CSAM and AI-CSAM to national hotlines (like the National Center for Missing & Exploited Children in the U.S.). They must share threat intelligence with law enforcement. And they must publish transparency reports showing how much abuse they detected, how much they missed, and what they did about it.

6. Fund Victim Support and Civil Society

Survivors need resources. Advocacy groups need funding. Researchers need access. We cannot leave child protection to tech companies and law enforcement alone. Civil society must have a seat at the table and the resources to fight back.

The Bottom Line: This Is an Emergency

Treating adults like adults is a reasonable principle, but not when the tool in question is a machine that can generate infinite child sexual abuse material on demand.

OpenAI and every other AI company must understand: loosening restrictions on sexual content without ironclad safeguards is reckless. We are at a fork in the road. We can act now, with clear laws, strong safeguards, mandatory detection, and real consequences, or we can watch as AI becomes the greatest accelerant of child sexual abuse in history.

There is no middle ground. The time to act is now.

For more information, see:

Internet Watch Foundation: IWF AI-CSAM Research

National Center for Missing & Exploited Children: NCMEC

ECPAT International: ECPAT

U.S. Department of Justice Child Exploitation Section: DOJ

References

News & Policy Sources

Reuters. (2025, October 14). OpenAI to allow mature content on ChatGPT for adult verified users starting December 2025. Retrieved from https://www.reuters.com/business/openai-allow-mature-content-chatgpt-adult-verified-users-starting-december-2025-10-14

The Verge. (2025, October 15). Sam Altman says ChatGPT will soon sext with verified adults. Retrieved from https://www.theverge.com/news/799312/openai-chatgpt-erotica-sam-altman-verified-adults

Ars Technica. (2025, February). ChatGPT can now write erotica as OpenAI eases up on AI paternalism. Retrieved from https://arstechnica.com/ai/2025/02/chatgpt-can-now-write-erotica-as-openai-eases-up-on-ai-paternalism

National Center for Missing & Exploited Children (NCMEC). (2025). CyberTipline Data Report, First Half 2025. Alexandria, VA: NCMEC. Retrieved from https://www.missingkids.org/ncmec-data-reports

Internet Watch Foundation (IWF). (2025). Annual Report 2024–2025: AI-Generated Child Sexual Abuse Imagery. Cambridge, UK: IWF. Retrieved from https://www.iwf.org.uk/report/2024

Europol. (2025, February). Press release: Major European operation targets creators and distributors of AI-generated child sexual abuse material. The Hague: Europol. Retrieved from https://www.europol.europa.eu/media-press/newsroom

BBC News. (2024, September 9). Man jailed for using AI to create child abuse images. London: BBC. Retrieved from https://www.bbc.com/news/technology

U.S. Department of Justice. (2025, March 12). Wisconsin man indicted for creating child sexual abuse material using artificial intelligence. Washington, DC: DOJ Press Release. Retrieved from https://www.justice.gov/opa/pr

Ventura County District Attorney’s Office. (2025, September). AI-generated child sexual abuse cases dismissed due to legal gaps. Ventura, CA: Press Statement.

Los Angeles Times. (2025, March 3). Disney Channel actress Kaylin Hayman speaks out on deepfake exploitation, calls for stronger AI laws. Los Angeles: LA Times.

Thorn. (2024). The Rise of AI-Generated Child Sexual Abuse Material and the Urgent Need for Detection Standards. Los Angeles: Thorn. Retrieved from https://www.thorn.org/blog/ai-csam-detection-standards

WeProtect Global Alliance. (2024). Artificial Intelligence and Child Sexual Abuse Material: Emerging Risks and Policy Responses. London: WeProtect Foundation. Retrieved from https://www.weprotect.org/library

ECPAT International. (2025). AI and Online Child Sexual Exploitation: Global Threat Assessment 2025. Bangkok: ECPAT. Retrieved from https://www.ecpat.org/resources

United Nations Office on Drugs and Crime (UNODC). (2024). Global Report on Online Child Sexual Exploitation 2024. Vienna: UN Publications.

California District Attorneys Association. (2025, August). Statement on AI-Generated Child Sexual Abuse Material and Legislative Reform Needs. Sacramento, CA: CDAA.

Nasarenko, E. (2025, September). Interview with ABC7 Los Angeles on AI-CSAM and prosecutorial challenges. Retrieved from https://abc7.com

Hotlines serve as a pivotal resource for individuals seeking immediate assistance across various sectors. Their effectiveness hinges on accessibility, trained personnel, and efficient call management systems

the ethics surrounding the surveying of child abuse necessitate a careful balance between the need for data collection and the imperative of safeguarding vulnerable populations. Ethical considerations must prioritize the well-being of children, ensuring that their rights and dignity are upheld throughout the research process.

Combating child trafficking requires collective action. Organizations like ECPAT International, UNICEF, and the National Human Trafficking Hotline coordinate rescue efforts and survivor support across borders. Tech companies are implementing stronger safeguards, using machine learning to detect and remove exploitative content.

The intersection of technology and social justice has never been more critical. As AI continues to evolve, its capabilities in detecting and combating smuggling and human trafficking will further strengthen law enforcement and humanitarian efforts.

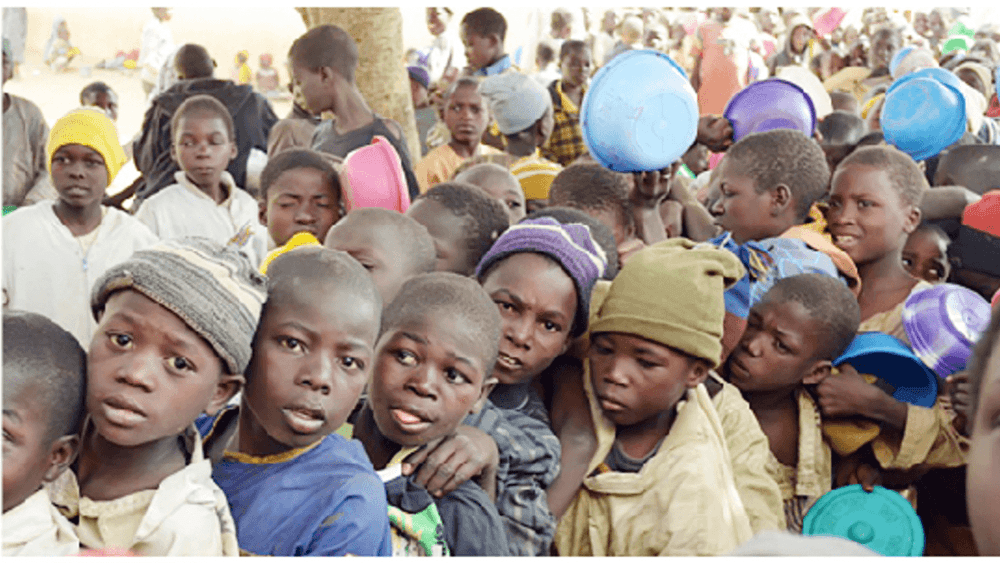

The Almajiri system began as a noble path of learning but has been weakened by neglect and lack of support. Today, millions of children suffer under conditions that deny them their rights and their futures

tackling child abuse in South Africa requires a multifaceted approach that involves individuals, families, communities, and the government. It calls for increased awareness, education, and active participation from all sectors of society.

The fight against child labor in Europe began in earnest during the 19th century. Social reformers, writers, and activists highlighted the plight of children working in hazardous conditions

Child sexual exploitation can be prevented through education, vigilance, and strong legal action. Key steps include teaching children body safety, monitoring online activity, reporting abuse, and supporting survivors. Community awareness and trained professionals are essential to detect and stop exploitation early.

Online gaming platforms have become a significant avenue for child sexual exploitation, with predators using in-game communication features, virtual gifts, and mentorship tactics to groom children in as little as 19 seconds, making parental awareness and active engagement in gaming crucial for protection.

Marianco is a non-profit organization dedicated to combating child trafficking, pedophilia, and exploitation. Inspired by Francisco Padilla, a former street orphan, Marianco provides comprehensive support to vulnerable children, including shelter, education, healthcare, and counseling. The organization aims to create a world where every child is safe and has the opportunity to reach their full potential. Child trafficking, pedophilia, and related forms of exploitation are pressing issues that strip millions of children of their rights, safety, and dignity. Marianco recognizes the urgency of addressing this crisis and is committed to disrupting cycles of exploitation by raising awareness, advocating for stricter laws, and creating prevention and rehabilitation programs. Their short-term goals include raising awareness, building partnerships, fundraising, developing rehabilitation centers, offering legal aid, and creating educational programs. Marianco is preparing to launch an advocacy campaign to educate communities about child trafficking and how to prevent it. With your support, Marianco can turn these plans into reality and create a world where children are safe, loved, and empowered.